
I am a Ph.D. student in Data Science at Seoul National University, advised by Professor Taesup Kim. I completed my Master's degree at the same graduate school and am affiliated with LAAL. Before my Master's, I worked as a Data Scientist at LG CNS. I earned my Bachelor's degree in Applied Statistics from Yonsei University.

Email / Google Scholar / GitHub / LinkedIn

My research focuses on real-world applications of AI models. I work on representation learning and transfer learning, particularly at the intersection of uncertainty (probability) and machine learning. My long-term goal is to understand, evaluate, and enhance modern AI models, such as pre-trained and large foundation models. To that end, I develop new theories, algorithms, and applications. Recently, I have been particularly interested in large language models (LLMs) that can refine themselves through self-prediction.

Kyubyung Chae*, Gihoon Kim*, Gyuseong Lee*, Taesup Kim, Jaejin Lee, Heejin Kim.

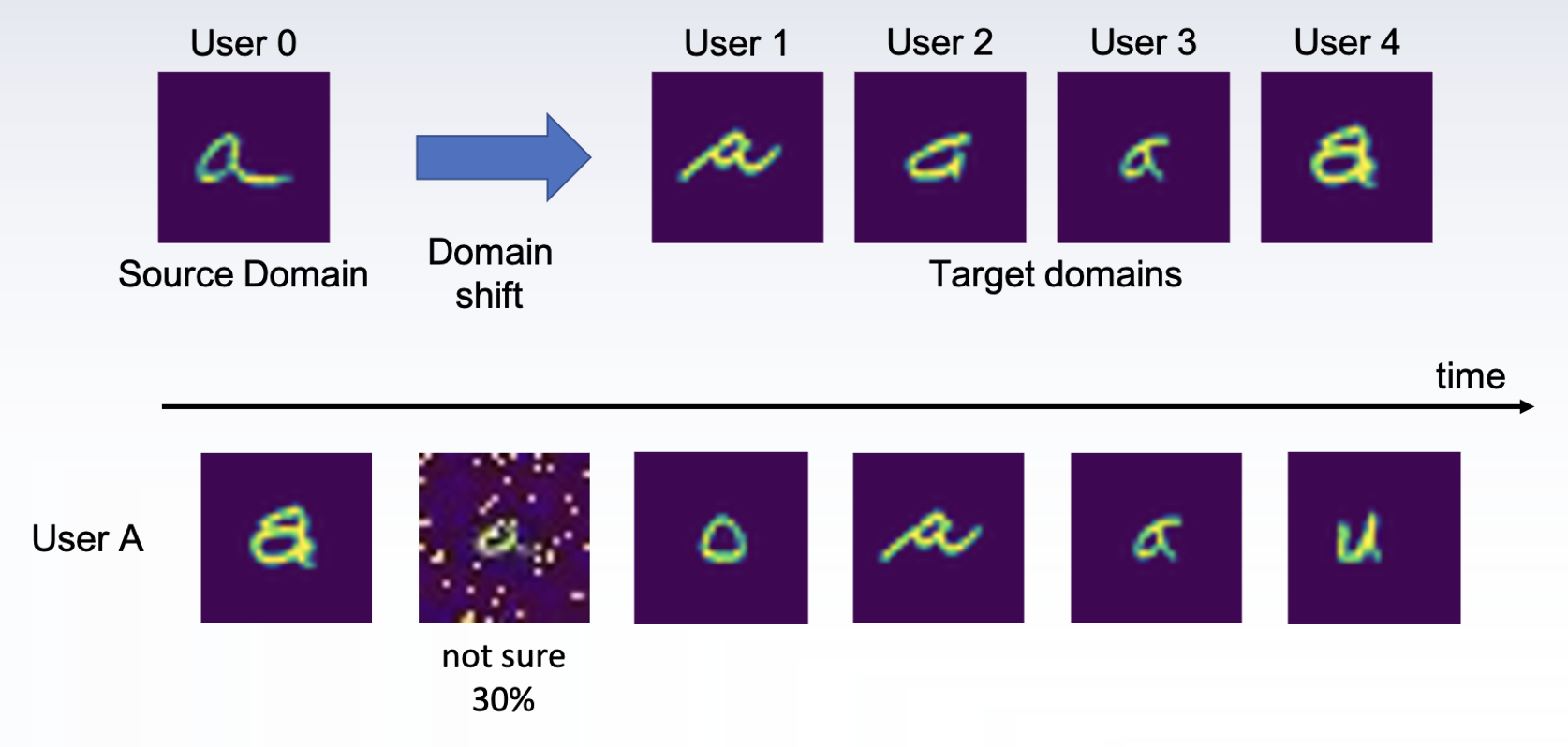

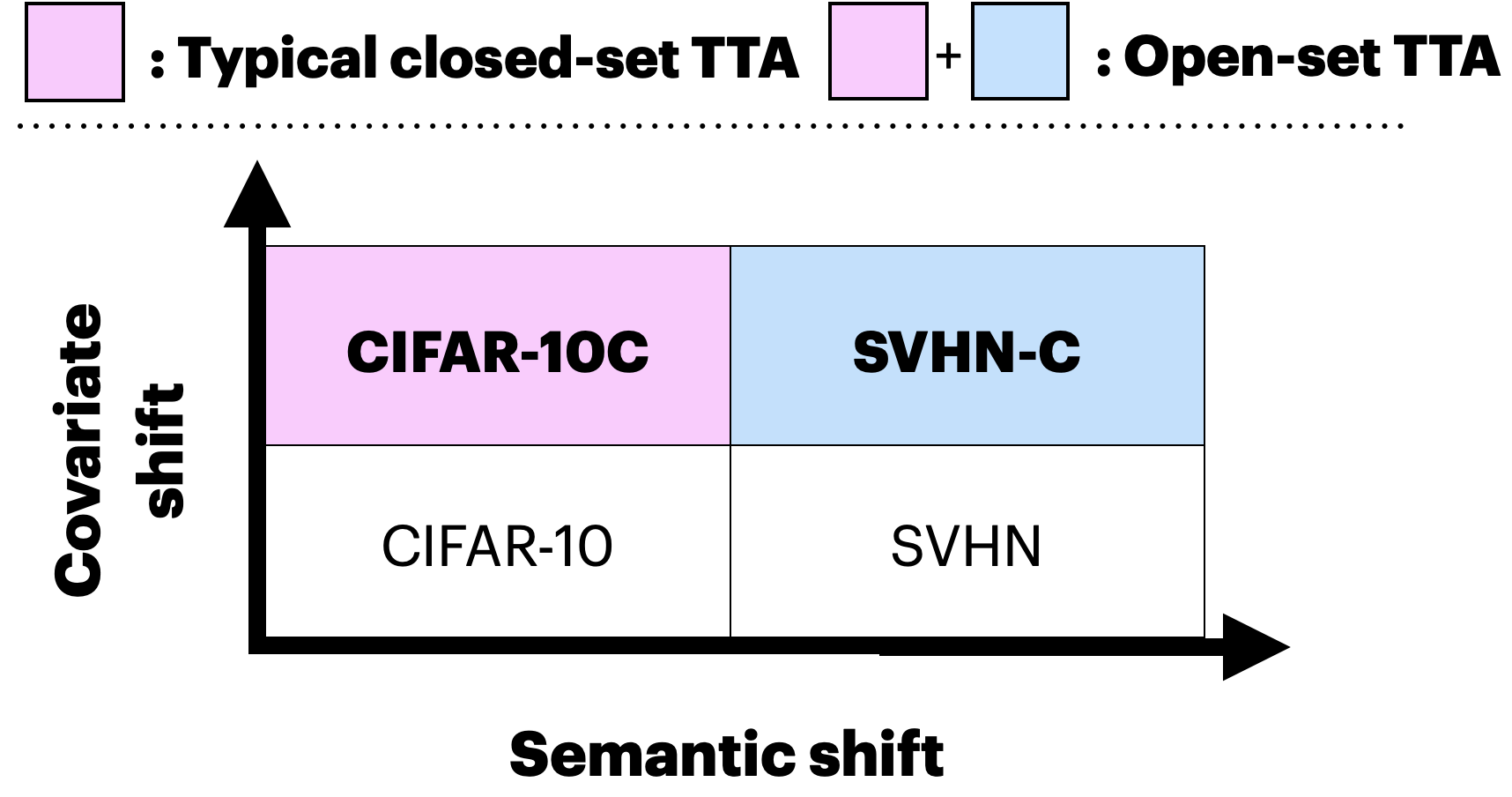

Kyubyung Chae*, Hyunbin Jin*, Taesup Kim.

Juan Yeo, Jinkwan Jang, Kyubyung Chae, Seongkyu Mun, Taesup Kim.

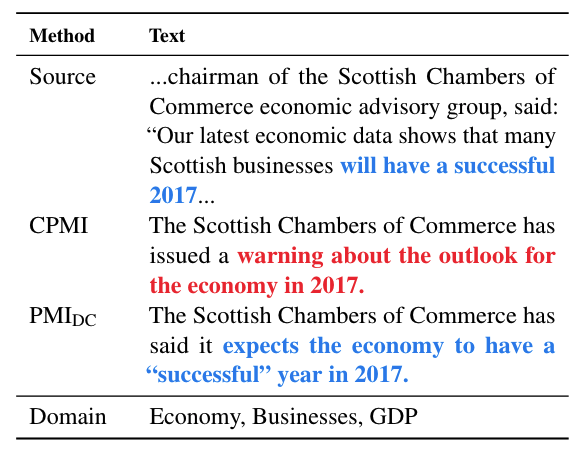

Jaepill Choi*, Kyubyung Chae*, Jiwoo Song, Yohan Jo, Taesup Kim.

Kyubyung Chae*, Jaepill Choi*, Yohan Jo, Taesup Kim.

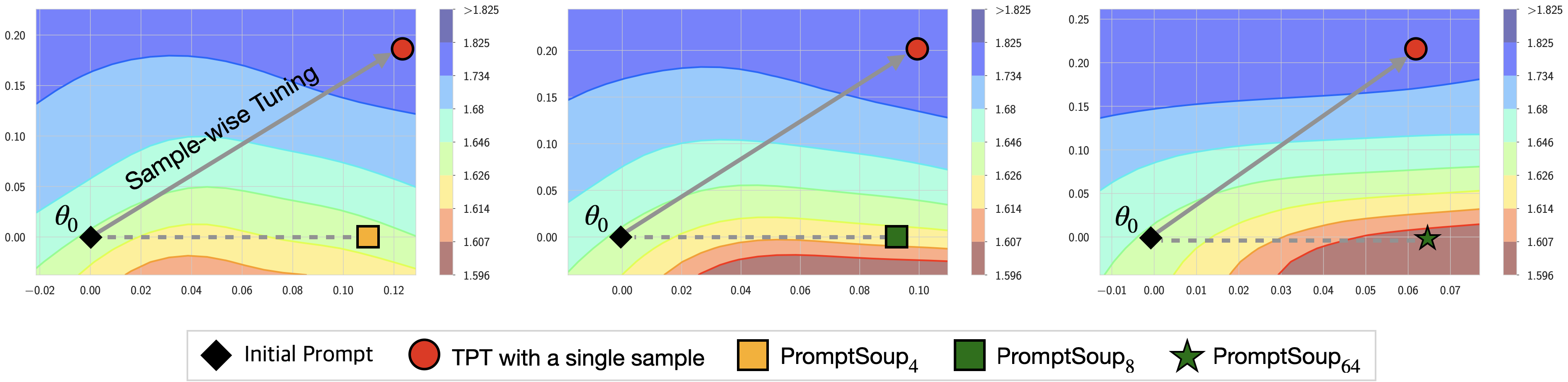

Kyubyung Chae, Taesup Kim.

Kyubyung Chae, Minsoo Jo, Junseo Hwang, Jinkwan Jang, Taesup Kim.

Yewon Han, Kyubyung Chae, Taesup Kim.

Jaepill Choi, Kyubyung Chae, Jiwoo Song, Taesup Kim.
